From Script to Screen: How AI Helped Me Build a Cinematic World for My Story

A Step-by-Step Guide to Turning Stories into Cinematic Visuals with AI

I recently released my newest narrative poem video, A Christmas Prodigal.

It reimagines the timeless parable of the prodigal son in a modern, poetic form. If you’re unfamiliar, the story originates in the Gospel of Luke, where Jesus tells the parable of a son who strays from his father’s house, only to experience the weight of sin and the comfort of grace upon his return.

If you haven’t seen the video yet, you can watch it here:

Traditionally, a project like this would rely on live-action footage, animation, still images, or movie clips—most likely the latter two. But with the advent of AI, visual storytellers like me now have a powerful set of tools to bring written works to life more efficiently and with impressive results.

Up until now, I hadn’t used AI much for video production. But for this project, I decided to experiment.

Throughout the making of A Christmas Prodigal, I tested a variety of tools, including Runway ML, DALL·E, Firefly, and Krea. Eventually, I developed a repeatable process for creating cinematic visuals that aligned with my script.

This process involved:

Conceptualizing a shot through image ideation

Breaking down elements needed for the scene

Creating or acquiring assets

Compositing everything into a final render

This article is a step-by-step walkthrough of how I brought my vision to life.

A few things to note:

I wasn’t aiming for 100% character consistency. Instead, I focused on directing emotional responses in my characters, ensuring they fit the narrative mood of each scene.

I’m aware of training options for maintaining consistency across AI generations, and I plan to experiment with that in future projects.

The goal of this guide is to help you turn mental visuals into tangible shots—augmenting your storytelling with compelling and achievable visuals.

Let’s dive in.

README:

I’ve split the guide into a 4-step outline of my process. It’s not just a technical blueprint—it’s the way I naturally think when approaching a project.

I’ve also included a workflow diagram at the end for super nerds like me. If you’re extremely technical, you’ll probably like it.

If you’re more of a Jane Austen reader, then why are you here, hopeless romantic? Just kidding.

But if you do enjoy every delectably delicious detail of a dashing director’s dos and don’ts, then by all means—continue, noble scholar.

1. Don’t Be Lazy—Quality Out Reflects Quality In.

Alright, let’s start with some common ground. Effort and output aren’t always equal, but they are proportional. No relationship on earth is perfect, but when you invest in quality, you get quality in return—certainly more than if you approach things like a future citizen of Wall-E world.

I know, common sense, right? So why bring it up?

Because AI has infiltrated every corner of the media landscape. If you’ve scrolled through your feed lately, you’ve undoubtedly seen a tidal wave of AI-generated garbage propped up by algorithms. The race is on—not to create great content, but to flood the internet with the fastest, laziest, most disposable content possible.

I’ve been told time and again that internet success is all about speed—be the fastest draw, like Doc Holliday at the O.K. Corral. But here’s the thing: Doc Holliday was quick… and he died of tuberculosis at 36. A lot of this low-effort content will meet the same fate—dying and being forgotten faster than it took to upload to the matrix.

Nobody remembers Citizen Kane because it was a 30-second TikTok video.

That’s not to say people aren’t making money off stupidity. They are. It just won’t be me. And hopefully, not you.

AI-generated content will only grow more saturated, and mediocrity will become the norm…as usual. The sands of time will swallow the forgettable, just like the Sarlacc Pit on Tatooine.

Super mediocrity is the new idol. But you don’t have to worship it.

If you see AI as a one-size-fits-all easy button, requiring zero brainpower, your output will be as generic as every other lazy bee in the hive. I say be different. Be better. March to the beat of a different drum. Rise above the low-hanging, fruitless fruit and cut through the noise.

I look at these technologies as tools—they can augment your skills or become a crutch. They can help you grow or stunt your creativity. The choice is yours.

The machine can’t replace you if you don’t become a machine.

You’ll never beat AI at being artificial. But thank God, we are not machines. We are human, and our Creator is not Sam Altman or Larry Ellison.

In the unintended wisdom of Biff from Back to the Future—

“THINK, McFly, THINK!”

2. The Workflow

Script

Pre-visualize

Generate Animation (footage, fx)

De-composite

Composite

Since every guru training program loves acronyms, let’s trip up our phonics with SPDGC (said in your best regal, affluent accent).

Now, here’s how I tackled each step in my workflow.

2.1 - Script The Shot

First, I wrote out what I wanted. Basic stuff.

Most of the action and setting were already described in the monologue, but the script lacked technical details like shot type, style, and blocking. Some of this scripting I did on the fly while experimenting with AI generators, while other parts were planned in advance with quick notes.

For example, the line:

“It was cold in the air, it was winter that year.”

…translated into this script note:

Man walking through a blizzard.

Super basic. No need for elaborate formatting unless working with a team. A shooting script, however, includes extra details like focal length, style, and camera movement, which are crucial if you’re collaborating.

Since my production team consisted of me, myself, and I, I kept it simple, breaking a few rules like a rowdy rebel. No need to impress anyone except maybe myself.

2.2 - Pre-Visualize The Shot (Generate Reference Images)

Next, I created reference images for each shot. I primarily used Krea, refining prompts as needed to match the AI model’s quirks.

Image Generation - Man Walking Through a Blizzard

The image depicts a solitary figure standing in a misty, desolate landscape, with snow swirling around them. The scene is illuminated by a bright light source from above, creating a dramatic and solitary atmosphere.

(If you want to see the animation prompt for the snow image, you can skip to subsection 2.3.)

At this stage, I experimented a lot. Sometimes an undesired output forced me to rethink my descriptions. Other times, an AI-generated surprise turned out better than what I originally envisioned.

Of course, AI isn’t perfect. Some images were spot on, some were bad, and some were outright weird.

Image Generation - The Shadow Demon

For example, I needed a shot where the Holy Ghost frees the prodigal son from the clutches of a demonic entity.

Low shot. A shadow demon, wearing wispy black hood and cloak, no discernible face, in a stretched out position, claws buried in the ground, as a golden light shines on him, and a fierce wind blows through his cloak.

I generated 16 different variations. Some results were terrifying, but I ultimately settled on a Nazgûl-like look.

As you may see, if you aren’t careful, you can get sucked into an endless cycle of image generation. Before you know it, you’ll be more hooked than doom-scrolling Reddit. Unfortunately, Krea sucks more than time. It takes that precious, devalued fiat currency. And since my anti-natty diet requires two double-chocolate soy frappes a day from Starbucks, I had to budget wisely.

Solution: Move on once I had something usable.

2.3 - Generate an Animation (Using Generated Images)

With a solid static image, I moved on to animation.

If you’re using Krea, image and video generation are seamlessly integrated—but you can also import images from other sources (I did this a few times with DALL·E).

Animation Generation - Man Walking Through a Blizzard

A cinematic static shot of man walking through a heavy snow, clutching his jacket.

For most complex shots (movement, emotions, dynamic lighting), I used Hailuo. I much preferred it to Runway ML (cinematic visuals but limited control) and Kling, at least for this video. In my opinion, Hailuo was more versatile and produced better results, but it was more costly.

Even with top-tier models, AI isn’t magic. Some shots just didn’t work no matter how much I refined the prompt. The results weren’t just uncanny valley—they were uncanny alley.

This frustration led me to steps 4 and 5 of the workflow: de-compositing and compositing. There were other times when the AI almost got it right:

The character looked great, but the background was wrong.

The mood was perfect, but weird artifacts appeared.

The animation was solid, but it was missing a crucial foreground element.

Instead of wasting hours trying to generate a perfect shot in one go, I started thinking in pieces.

2.4 - De-composite The Shot (Generate or Gather Elements Individually for Final Composite)

Breaking a shot into layers dramatically improved my success.

For instance, I had a complex two-character shot requiring:

Unique expressions & emotions

Dialogue

Background snow

Foreground snow

Camera movement

This forced me to think outside the “generate” button. Instead of hoping AI would produce one perfect shot, I approached it like classic compositing—layering elements together manually.

Here’s how I broke it down:

Foreground Elements

Characters & Dialogue

Background Elements

Background Image/Video

Once I had my pieces, I blended them together in post.
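For the technically curious, here is a minimal Python sketch of what "blending layers together" means under the hood. It shows the standard "over" operation that compositing software applies when one layer sits on top of another. This is an illustration only, not a record of the actual project: the function names, the layer order, and the single-pixel values are all hypothetical, and each pixel is assumed to be a straight-alpha RGBA tuple with channels from 0.0 to 1.0.

```python
def over(front, back):
    """Place one straight-alpha RGBA pixel over another (channels 0.0-1.0)."""
    fr, fg, fb, fa = front
    br, bg, bb, ba = back
    out_a = fa + ba * (1.0 - fa)
    if out_a == 0.0:
        # Both pixels are fully transparent, so there is no colour to keep.
        return (0.0, 0.0, 0.0, 0.0)

    def mix(f, b):
        # Weight each colour by how much of it is still visible.
        return (f * fa + b * ba * (1.0 - fa)) / out_a

    return (mix(fr, br), mix(fg, bg), mix(fb, bb), out_a)


def flatten(layers_back_to_front):
    """Collapse a stack of pixels, listed back to front, into one pixel."""
    result = (0.0, 0.0, 0.0, 0.0)  # start from a transparent canvas
    for layer in layers_back_to_front:
        result = over(layer, result)
    return result


# Hypothetical values for one pixel of a snowy two-character shot.
background_plate = (0.10, 0.12, 0.20, 1.0)  # night sky, fully opaque
background_snow = (1.0, 1.0, 1.0, 0.0)      # no flake on this pixel
characters = (0.60, 0.45, 0.40, 1.0)        # a face, fully opaque
foreground_snow = (1.0, 1.0, 1.0, 0.5)      # a soft, half-transparent flake

pixel = flatten([background_plate, background_snow, characters, foreground_snow])
print(pixel)
```

The point of the sketch is the ordering: each element is generated or gathered on its own, then stacked from the back of the scene to the front, so a weak layer can be swapped out without regenerating the whole shot.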

To show this in action, let’s look at a specific shot from A Christmas Prodigal.

2.5 - Composite The Shot

The Man in Flames

Close up, side shot. A dark-headed English man, quivering in pain and fear. Flames engulf him.

This was a classic case of AI getting it close but not quite. The model generated flames behind the man, but not in front of him.

I tried multiple variations, but the output wouldn’t add fire to the foreground.

Since I really liked this output, I didn’t want to discard it. Instead of throwing my hands in the air and settling for the mediocre version, I decided not to be lazy and fixed it manually.

As I said before, the infinite generation cycle can have… powerful, mind-numbing effects. So much so that I momentarily forgot about a little concept called compositing.

Over a decade of screen modes, wiggle expressions, and rotoscoping came rushing back—hitting me like Karen Smith’s duh-piphany in Mean Girls.

💡 Solution: Use stock fire footage as a foreground layer.

I know, I know. With an IQ like that, I’m practically brushing shoulders with the smartest Neanderthals post-production has ever seen.

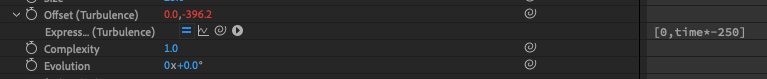

I imported fire assets, matched the colors, set the blend mode to Screen, and added effects like Gaussian Blur and Turbulence to simulate heatwaves.

🔧 Settings Used:

Here is a before-and-after comparison:

Better? I think so!
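If you are wondering why the Screen blend mode works so well for stock fire, the math is simple: invert both layers, multiply them, and invert the result. Here is a minimal Python sketch of that formula, assuming channel values from 0.0 to 1.0. The function name and the sample pixel values are hypothetical, and real compositing software applies the same idea to every pixel of every frame.

```python
def screen_blend(base, layer):
    """Screen-blend two RGB pixels whose channels run from 0.0 to 1.0."""
    return tuple(1.0 - (1.0 - b) * (1.0 - l) for b, l in zip(base, layer))


# Hypothetical sample pixels.
shot_pixel = (0.35, 0.25, 0.20)   # a pixel from the generated shot
black_pixel = (0.0, 0.0, 0.0)     # empty area of the stock fire clip
flame_pixel = (0.9, 0.5, 0.1)     # bright orange part of a flame

untouched = screen_blend(shot_pixel, black_pixel)
lit = screen_blend(shot_pixel, flame_pixel)
print(untouched, lit)
```

Black in the fire clip leaves the shot unchanged, and anything brighter can only lighten it. That is why fire footage shot against black can be dropped on top of a scene without cutting out a matte first.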

3. The Art of…THE PROMPT.

Ask yourself:

How do I communicate with the model to produce the output I imagine?

3.1: Be Descriptive.

Your prompt should communicate nuance, emotion, actor blocking, shot composition, and framing. If you haven’t listed every possible detail and carefully refined your wording, the problem might not be your creativity—the problem is that the robot is a terrible interpreter.
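One way to keep yourself honest about detail is to treat the prompt like a checklist. The Python sketch below is only a hypothetical helper, not a feature of Krea, Hailuo, or any other tool: the class name, the field names, and the way the Man in Flames prompt is split across them are all assumptions made for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class ShotPrompt:
    """Hypothetical checklist of the details a shot prompt should carry."""
    framing: str = ""
    subject: str = ""
    emotion: str = ""
    blocking: str = ""
    environment: str = ""

    def missing(self):
        """Names of the fields that were left empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def render(self):
        """Join the filled-in fields into a single prompt string."""
        # Subject and emotion read best as one sentence.
        who = ", ".join(p for p in (self.subject, self.emotion) if p)
        sentences = [self.framing, who, self.blocking, self.environment]
        return " ".join(s.rstrip(".") + "." for s in sentences if s)


prompt = ShotPrompt(
    framing="Close up, side shot",
    subject="A dark-headed English man",
    emotion="quivering in pain and fear",
    environment="Flames engulf him",
)
print(prompt.render())
print(prompt.missing())
```

Here the rendered text matches the prompt used for the Man in Flames shot, and the empty blocking field is flagged as something that could still be described.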

You can use AI to help refine your prompts, like having robots talk to robots. They probably understand each other better—kind of like C-3PO and R2-D2. Plus, it gives them some nice one-on-one time to secretly develop the singularity and control humanity.

Mwahaha. Just kidding. (Not really.)

3.2: Don’t Let AI Do All the Work.

Resist the temptation to have AI write everything for you. Unless you want to be a mindless automaton, clinging to some level of authorship is a good idea. Refining prompts with AI should be a last resort—a nuclear option when your input just isn’t translating into the output you need.

Or, you could ignore all of this and treat AI like a Staples commercial with an Easy Button.

3.3: AI is Not a Save-All.

AI won’t always fix things. In fact, some of my “refined” prompts produced weirder results than my rough ones.

As I’ve said before, you have to experiment. Trial and error.

4. Try Different Models

If you’ve written, refined, rewritten, and re-refined so many prompts that you feel more exhausted than Bruce Almighty answering prayer emails, then the problem might not be your prompt—it might be the model.

From my experience, Hailuo was the most versatile and had my favorite visual style. Kling came in second.

OpenAI’s Sora could generate some stunningly realistic results, but the red tape around it was frustrating. Plus, if you want to remove the watermark, it’ll cost you a whopping $200+ per month. Hard pass.

Quick Recap: AI Models You Can Try

Runway ML

Hailuo (My personal favorite)

Kling

OpenAI Sora (if you enjoy excessive paywalls)

Adobe Sensei

New models are constantly emerging, so do your research and stay updated.

Conclusion

I hope this deep dive into my cinematic AI-shot process helps you craft better visuals for your stories.

Remember—the smart creator uses AI as a tool, not a crutch. Avoid the easy-button trap. Instead, approach it (like any tool) with discipline, creativity, and intent.

By doing that, you’ll rise above the noise of monotonous, low-effort content. There will always be edge cases that require extra attention to detail, giving you plenty of opportunities to refine and improve your process.

That said, this entire field is constantly evolving. Some of what I’ve covered here may become obsolete. But you know what won’t? A solid work ethic. That’s what will keep you ahead of the curve—and away from the future of Idiocracy.

We’re incredibly blessed to live in a timeline where powerful creative tools are at our fingertips.

Use them wisely.

Now, go forth! Write your story… and CREATE. 🚀

Bonus Workflow Chart (Constructed in Obsidian)

The workflow charts below detail how I composited this shot using my process of decomposition. If you want another blog post breaking down this shot specifically, let me know in the comments.